Introduction: The Growing Importance of AI Ethics in 2025

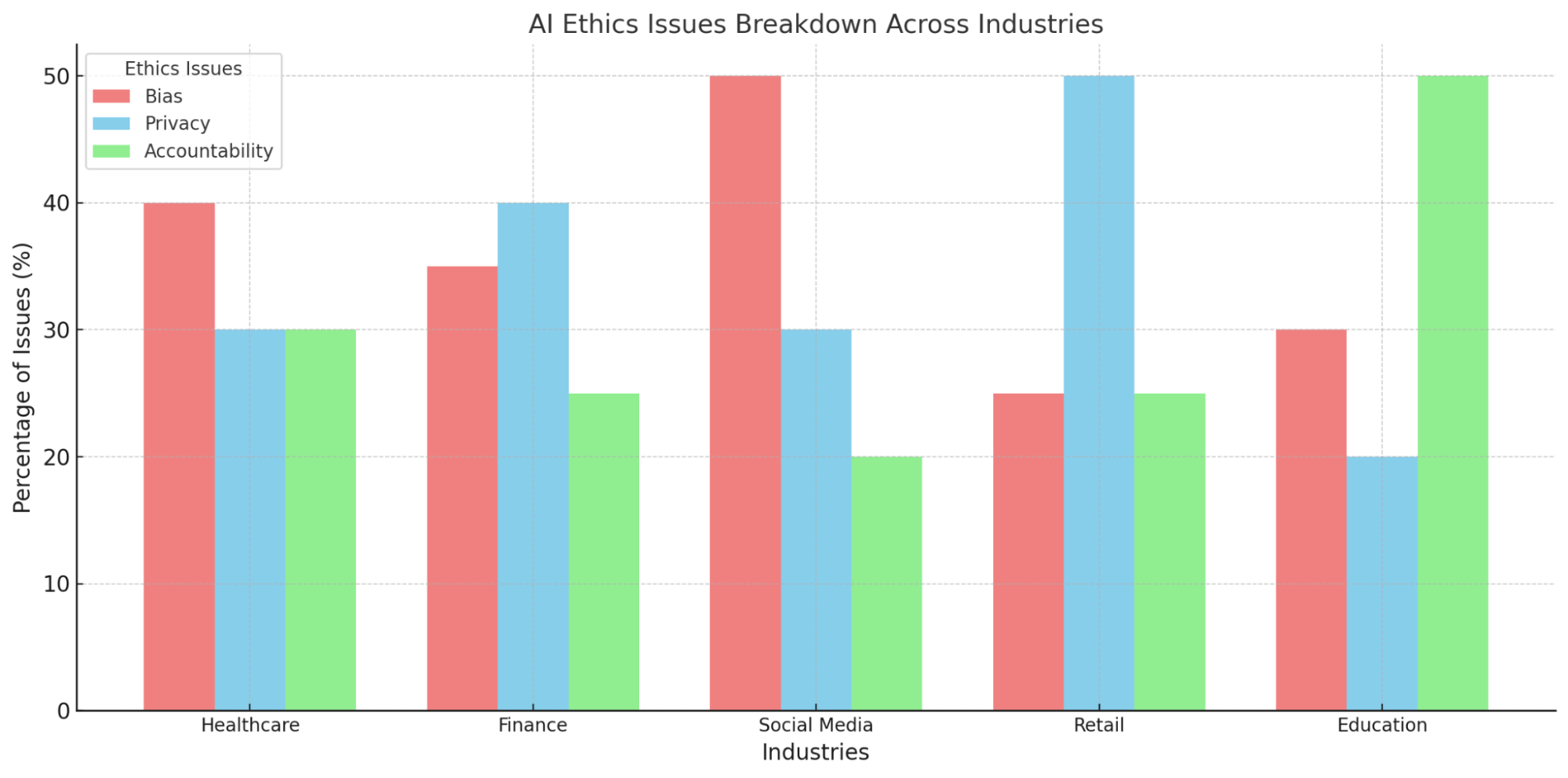

Artificial Intelligence (AI) is becoming more pervasive in our lives, from automated decision-making systems in finance to personalized healthcare treatments and predictive analytics in business. However, as AI technologies continue to advance, the ethical issues surrounding them—particularly bias, privacy, and accountability—become more pressing.

In 2025, businesses, governments, and societies must grapple with these ethical concerns to ensure AI technologies benefit humanity without causing harm or perpetuating inequality. This blog post delves into the major ethical challenges posed by AI, offering real-world examples, solutions, and best practices for navigating these issues.

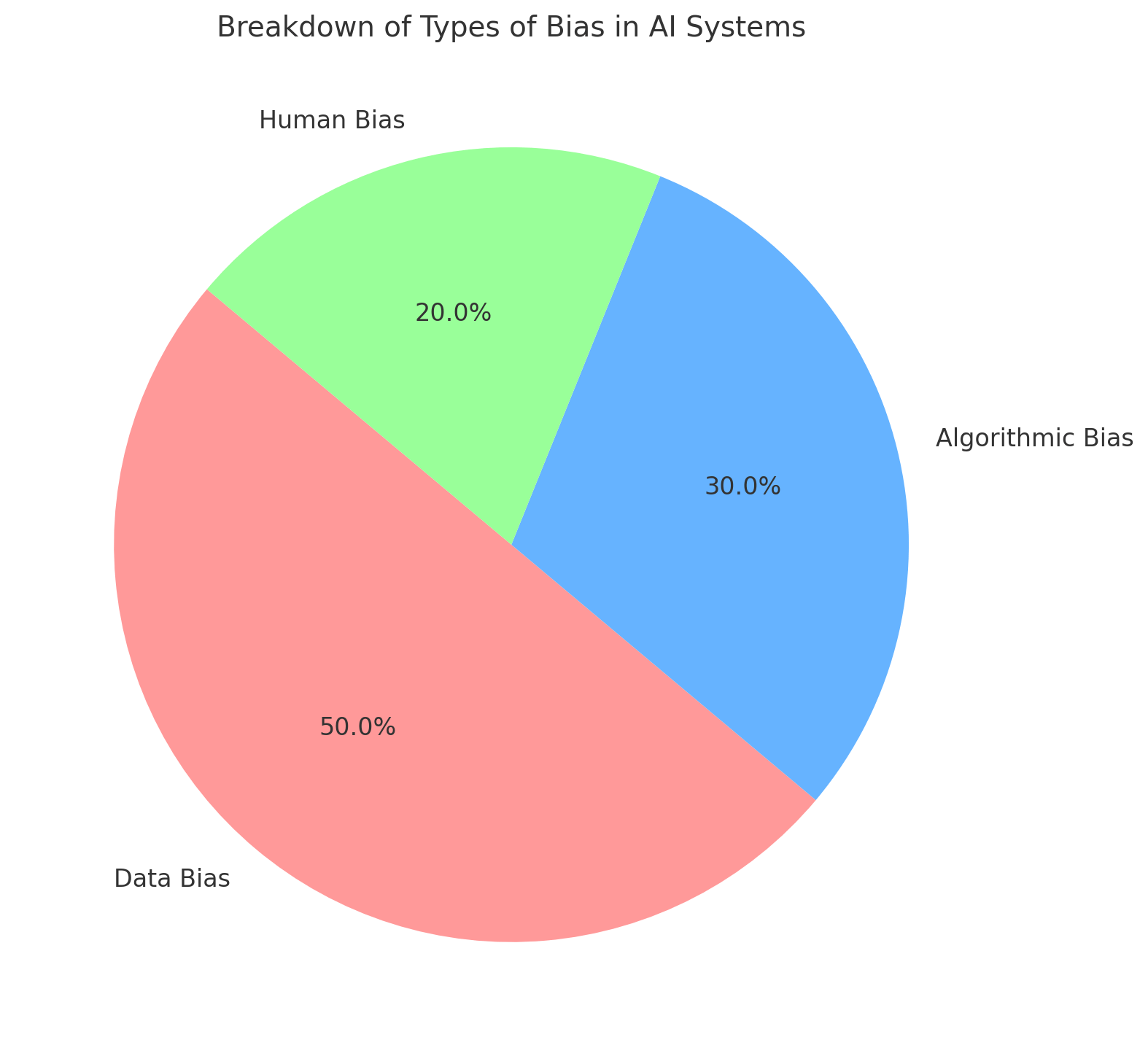

1. The Challenge of Bias in AI: Unpacking the Issue

AI systems are trained on vast amounts of data, and the quality of the data often dictates the behavior of AI models. However, if the data used to train AI is biased—either due to historical data imbalances or flawed data collection methods—AI systems can perpetuate or even amplify these biases, leading to discriminatory outcomes.

Key Areas of Bias:

- Data Bias: AI models trained on biased datasets can lead to skewed predictions and unfair outcomes.

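- Algorithmic Bias: Even if the data is unbiased, the algorithms themselves can introduce bias, for example when a model is tuned for overall accuracy and performs worse on smaller groups as a result.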

- Human Bias: Developers' unconscious biases can influence the design and functioning of AI systems.

Solutions and Mitigations:

- Bias Detection and Auditing: Regular audits of AI models for bias are crucial. AI developers can use tools to detect and correct biases in the training data and models.

- Diverse Data: Incorporating a broader range of data sources and ensuring that the data is representative of diverse populations can help mitigate bias.

- Human-in-the-loop Systems: In many applications, AI can be used to support human decision-making, allowing humans to intervene when the AI system shows signs of bias.
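To make the idea of a bias audit concrete, here is a minimal sketch of one common check: comparing the rate of favorable decisions across groups. The group labels, the sample decisions, and the 0.8 review threshold are all assumptions for illustration (the threshold loosely follows the "four-fifths" rule of thumb); a real audit would use several metrics and real outcome data.

```python
from collections import defaultdict


def selection_rates(records):
    """Return the share of favorable outcomes per group.

    `records` is an iterable of (group, outcome) pairs, where outcome is
    1 for a favorable decision and 0 otherwise.
    """
    totals = defaultdict(int)
    positives = defaultdict(int)
    for group, outcome in records:
        totals[group] += 1
        positives[group] += outcome
    return {group: positives[group] / totals[group] for group in totals}


def disparate_impact_ratio(rates):
    """Ratio of the lowest to the highest group selection rate (1.0 = parity)."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # no group received a favorable outcome
    return min(rates.values()) / highest


# Hypothetical audit sample: (group label, model decision)
decisions = [
    ("A", 1), ("A", 1), ("A", 1), ("A", 0),
    ("B", 1), ("B", 0), ("B", 0), ("B", 0),
]

rates = selection_rates(decisions)
ratio = disparate_impact_ratio(rates)

REVIEW_THRESHOLD = 0.8  # assumed cutoff, not a universal standard
needs_review = ratio < REVIEW_THRESHOLD
```

When `needs_review` is true, the result is a prompt for a human to investigate the data and the model, not proof of discrimination on its own.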

2. AI and Privacy: Protecting User Data in the Age of Smart Systems

As AI systems become more integrated into everyday life, they increasingly rely on vast amounts of personal data to function effectively. While data collection can enhance AI capabilities, it also raises significant concerns about privacy, security, and the potential misuse of sensitive information.

Key Privacy Concerns:

- Data Collection: AI systems often require personal data, such as location, health information, and browsing habits, to deliver personalized services.

- Surveillance: AI-powered surveillance systems raise concerns about privacy infringement, particularly in public spaces.

- Data Ownership: As AI systems utilize user data, questions around ownership and control of that data become increasingly important.

Real-World Examples of Privacy Violations:

- Cambridge Analytica Scandal: Personal data from millions of Facebook users was harvested without their informed consent and used to target political campaign messaging.

- Smart Home Devices: Smart devices such as Amazon Echo and Google Home collect large amounts of data on users, sometimes without their full awareness.

Solutions and Mitigations:

- Privacy by Design: AI systems can be designed with privacy in mind, ensuring that personal data is only collected when necessary, stored securely, and anonymized when possible.

- Regulatory Compliance: Companies should adhere to global privacy regulations like the GDPR (General Data Protection Regulation) to protect user data and maintain transparency.

- User Consent: Obtaining explicit consent from users before collecting and using their personal data is crucial for maintaining privacy.
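As a rough sketch of how privacy by design and consent checks might look in code, the example below drops every field the service does not need, replaces the direct identifier with a keyed hash, and collects nothing when consent is missing. The field names, the `ALLOWED_FIELDS` policy, and the key are hypothetical. Note that a keyed hash is pseudonymization rather than full anonymization, so the output should still be treated as personal data.

```python
import hashlib
import hmac

# Hypothetical policy: the only fields this service actually needs.
ALLOWED_FIELDS = {"age_band", "region"}


def pseudonymize(user_id: str, secret_key: bytes) -> str:
    """Replace a direct identifier with a keyed hash (HMAC-SHA256)."""
    return hmac.new(secret_key, user_id.encode("utf-8"), hashlib.sha256).hexdigest()


def prepare_record(raw: dict, secret_key: bytes):
    """Return a minimized, pseudonymized record, or None without consent."""
    if not raw.get("consent", False):
        return None  # no explicit consent: collect nothing
    record = {k: v for k, v in raw.items() if k in ALLOWED_FIELDS}
    record["user_ref"] = pseudonymize(raw["user_id"], secret_key)
    return record


# Placeholder key for the example; a real key belongs in a secret store.
key = b"demo-key-load-from-a-secret-store"

raw = {
    "user_id": "alice@example.com",
    "age_band": "30-39",
    "region": "EU",
    "browsing_history": ["..."],
    "consent": True,
}

clean = prepare_record(raw, key)
declined = prepare_record({**raw, "consent": False}, key)
```

Here `clean` keeps only the age band, the region, and an opaque reference, while `declined` is `None` because the consent flag is off.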

3. Accountability in AI: Who Is Responsible When Things Go Wrong?

One of the most pressing ethical concerns regarding AI is accountability. When AI systems make mistakes or cause harm—such as a misdiagnosis in healthcare or a wrongful arrest due to facial recognition—who is responsible? Is it the developers, the organizations using the technology, or the AI systems themselves?

Key Accountability Challenges:

- Lack of Transparency: Many AI models, especially deep learning models, are referred to as "black boxes" because their decision-making processes are opaque.

- Autonomy vs. Human Control: As AI systems become more autonomous, determining where human responsibility ends and AI's begins becomes increasingly difficult.

- Liability: In cases where AI causes harm, it’s often unclear who should be held legally responsible for the actions of the AI system.

Real-World Examples:

- Self-Driving Car Accidents: In 2018, an Uber self-driving car killed a pedestrian. Questions about who was responsible—Uber, the car’s software developers, or the human safety driver—remained central to the legal case.

- Medical AI: In 2023, an AI-driven diagnostic system misdiagnosed patients, leading to several instances of incorrect treatments and lawsuits against the healthcare providers using the AI tool.

Solutions and Mitigations:

- Explainability in AI: AI models should be designed with transparency in mind, allowing users to understand how decisions are made. This can help attribute responsibility in case of errors.

- Clear Regulatory Frameworks: Governments and organizations should work together to establish clear legal frameworks that define AI accountability.

- AI Governance: Developing ethical AI guidelines and governance structures within organizations can help ensure that AI technologies are used responsibly.
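Explainability is easiest to see with a simple model. The sketch below assumes a linear scoring model, where each feature's contribution is just its weight times its value, and reports which features drove a decision. The weights, feature names, and threshold are made up for illustration; deep learning models need dedicated explanation techniques that this example does not cover.

```python
def explain_linear_decision(weights, bias, features, threshold=0.0):
    """Score a case with a linear model and report each feature's contribution."""
    contributions = {name: weights[name] * value for name, value in features.items()}
    score = bias + sum(contributions.values())
    # Largest absolute contributions first, so the main drivers are listed first.
    ranked = sorted(contributions.items(), key=lambda item: abs(item[1]), reverse=True)
    return {"approved": score >= threshold, "score": score, "drivers": ranked}


# Hypothetical loan-screening model; weights and inputs are invented.
weights = {"income": 0.5, "debt_ratio": -2.0, "years_employed": 0.25}
applicant = {"income": 4.0, "debt_ratio": 1.5, "years_employed": 2.0}

report = explain_linear_decision(weights, bias=0.0, features=applicant)
```

Storing a report like this alongside each decision gives reviewers a record of why the system decided as it did, which helps when responsibility for an error has to be traced.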

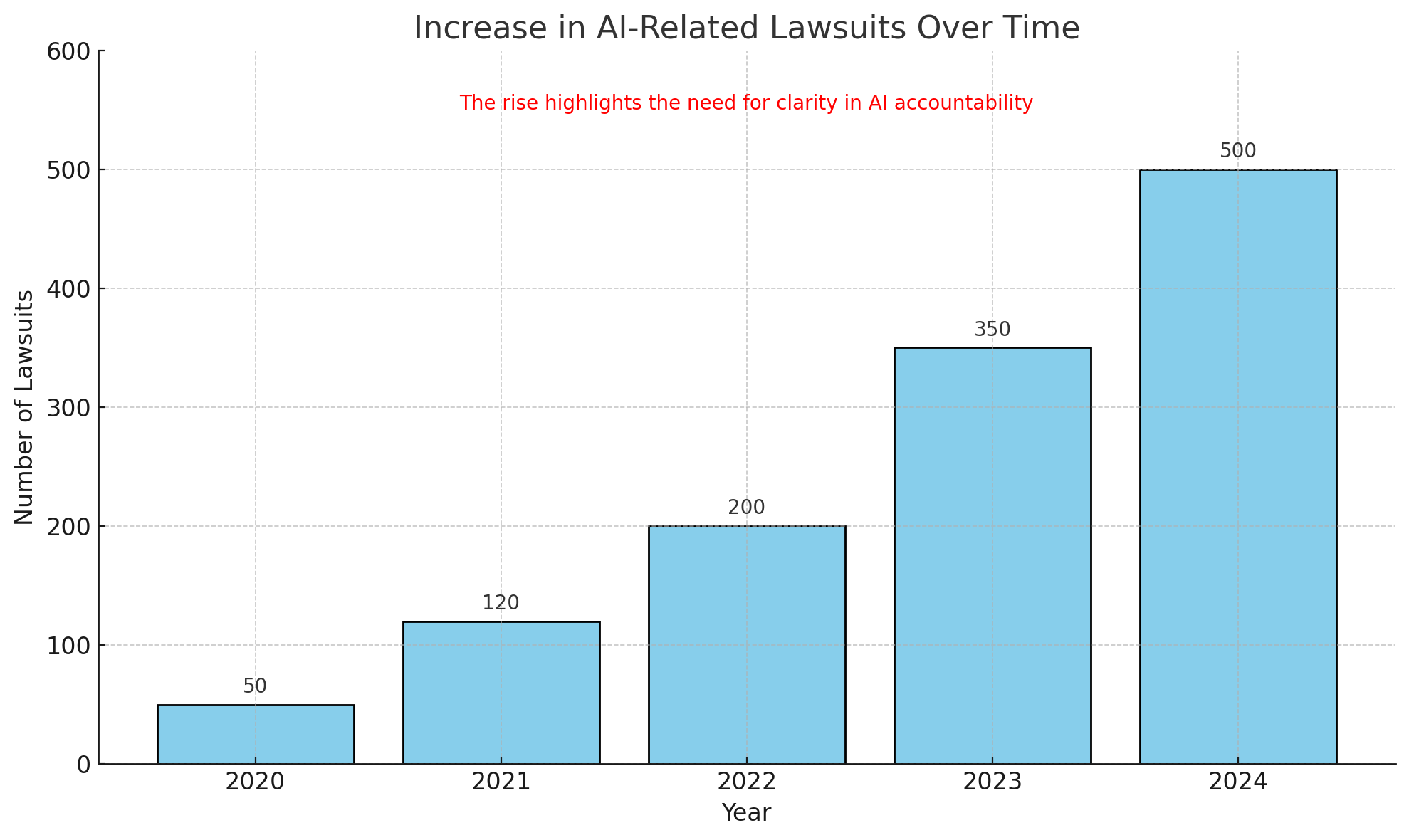

This chart shows the increase in AI-related lawsuits over the years, highlighting the growing need for clear accountability as AI systems become more autonomous. The trend underscores the urgency of addressing legal and ethical considerations in AI deployment.

4. The Role of Governments and Regulators in AI Ethics

Governments and regulators play a crucial role in ensuring AI technologies are developed and used ethically. As AI systems become more pervasive, the regulatory landscape must evolve to address emerging ethical challenges such as bias, privacy, and accountability.

Key Regulatory Challenges:

- Global Standards: The global nature of AI technology requires international cooperation to establish universal ethical standards.

- Adapting to Rapid Change: The fast pace of AI development often outstrips the ability of regulators to keep up with new innovations.

- Balancing Innovation and Ethics: Regulators must strike a balance between encouraging AI innovation and ensuring that ethical standards are not compromised.

Real-World Examples:

- GDPR: The European Union’s General Data Protection Regulation (GDPR) is one of the most comprehensive data privacy laws, setting a precedent for other countries to follow.

- AI Act: The EU's AI Act, proposed by the European Commission in 2021 and in force since August 2024, regulates AI technologies by categorizing them into different risk levels and attaching specific compliance requirements to each level.
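A risk-level approach like the AI Act's can be sketched as a simple lookup that an organization might keep for its own AI inventory. The four tier names follow the Act's commonly described categories, but the one-line obligation summaries are simplified, and the system names and their tier assignments are assumptions for illustration only, not legal classifications.

```python
from enum import Enum


class RiskTier(Enum):
    """Simplified risk tiers with a one-line summary of what each implies."""

    UNACCEPTABLE = "prohibited"
    HIGH = "strict obligations before and after deployment"
    LIMITED = "transparency obligations"
    MINIMAL = "no additional obligations"


# Illustrative inventory; tier assignments are assumptions, not legal advice.
SYSTEM_INVENTORY = {
    "social_scoring": RiskTier.UNACCEPTABLE,
    "cv_screening": RiskTier.HIGH,
    "customer_chatbot": RiskTier.LIMITED,
    "spam_filter": RiskTier.MINIMAL,
}


def systems_needing_action(inventory):
    """List systems that are prohibited or carry high-risk obligations."""
    flagged = {RiskTier.UNACCEPTABLE, RiskTier.HIGH}
    return sorted(name for name, tier in inventory.items() if tier in flagged)


flagged_systems = systems_needing_action(SYSTEM_INVENTORY)
```

Even a small inventory like this gives compliance teams a starting list of systems to review first.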

Solutions and Mitigations:

- Collaboration Between Stakeholders: Governments, businesses, and academic institutions should collaborate to create a comprehensive AI regulatory framework.

- Ethical AI Guidelines: Developing clear guidelines for AI development and deployment, including regular audits and assessments, can help ensure compliance with ethical standards.

- AI Ethics Boards: Establishing independent AI ethics boards within organizations can guide the development of AI technologies with ethical considerations in mind.

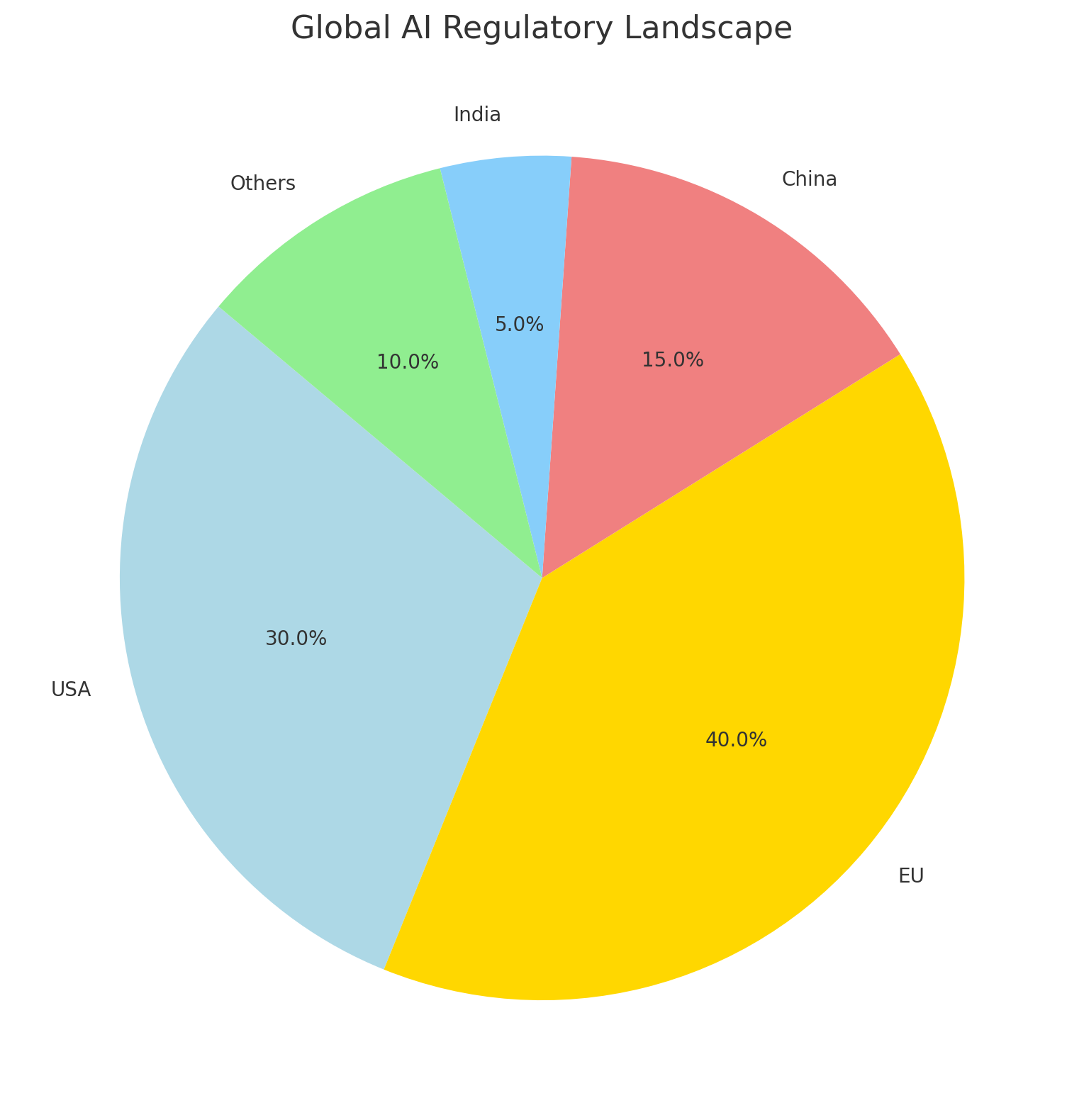

Global AI regulatory landscape, showing how different countries are addressing AI ethics through legislation.

The data emphasizes the diverse approaches taken by major players like the USA, EU, and China, along with contributions from other regions.

5. Addressing the Future of AI Ethics: The Role of Businesses and Developers

Businesses and developers must take proactive steps to ensure that AI technologies are developed ethically. This involves integrating ethical considerations into the entire AI lifecycle, from design to deployment.

Key Areas for Ethical AI Development:

- Diversity in Development: AI development teams should be diverse to minimize biases in design and ensure the AI solutions are equitable and inclusive.

- Continuous Monitoring: Ongoing monitoring of AI systems in operation is necessary to detect and correct any ethical issues, such as biases or privacy breaches.

- Ethical AI Education: Developers should receive training on AI ethics, ensuring they understand the potential societal impacts of their work.
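Continuous monitoring can start small. The sketch below keeps a rolling window of recent decisions per group and raises a flag when approval rates drift too far apart. The class name, window size, and 0.2 gap tolerance are hypothetical choices for the example; a production monitor would also track data drift, error rates, and privacy incidents.

```python
from collections import deque


class FairnessMonitor:
    """Track recent decisions per group and flag large gaps in approval rate."""

    def __init__(self, window_size=100, max_gap=0.2):
        self.window_size = window_size
        self.max_gap = max_gap  # assumed tolerance; choose per use case
        self.windows = {}

    def record(self, group, approved):
        """Add one decision; the oldest entry drops out once the window is full."""
        window = self.windows.setdefault(group, deque(maxlen=self.window_size))
        window.append(1 if approved else 0)

    def approval_rates(self):
        return {g: sum(w) / len(w) for g, w in self.windows.items() if w}

    def gap_exceeded(self):
        rates = self.approval_rates()
        if len(rates) < 2:
            return False  # nothing to compare yet
        return max(rates.values()) - min(rates.values()) > self.max_gap


# Hypothetical stream of live decisions for two groups.
monitor = FairnessMonitor(window_size=4, max_gap=0.2)
for approved in (True, True, True, False):
    monitor.record("A", approved)
for approved in (True, False, False, False):
    monitor.record("B", approved)

alert = monitor.gap_exceeded()
```

An alert like this is a signal to pause and investigate, ideally with a human reviewer, before the system keeps making decisions at scale.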

Real-World Examples:

- IBM’s Ethical AI Principles: IBM has established a set of ethical principles for AI development, emphasizing fairness, transparency, and accountability in AI systems.

- Google’s AI Principles: Google has committed to developing AI technologies that are socially beneficial and aligned with ethical standards, including ensuring fairness and privacy.

Solutions and Mitigations:

- AI Ethics Training Programs: AI developers and engineers should undergo regular ethics training to stay updated on best practices for building fair and responsible AI.

- Transparency and Open-Source Tools: Open-source AI tools and transparent development processes can help ensure that ethical considerations are incorporated into the software.

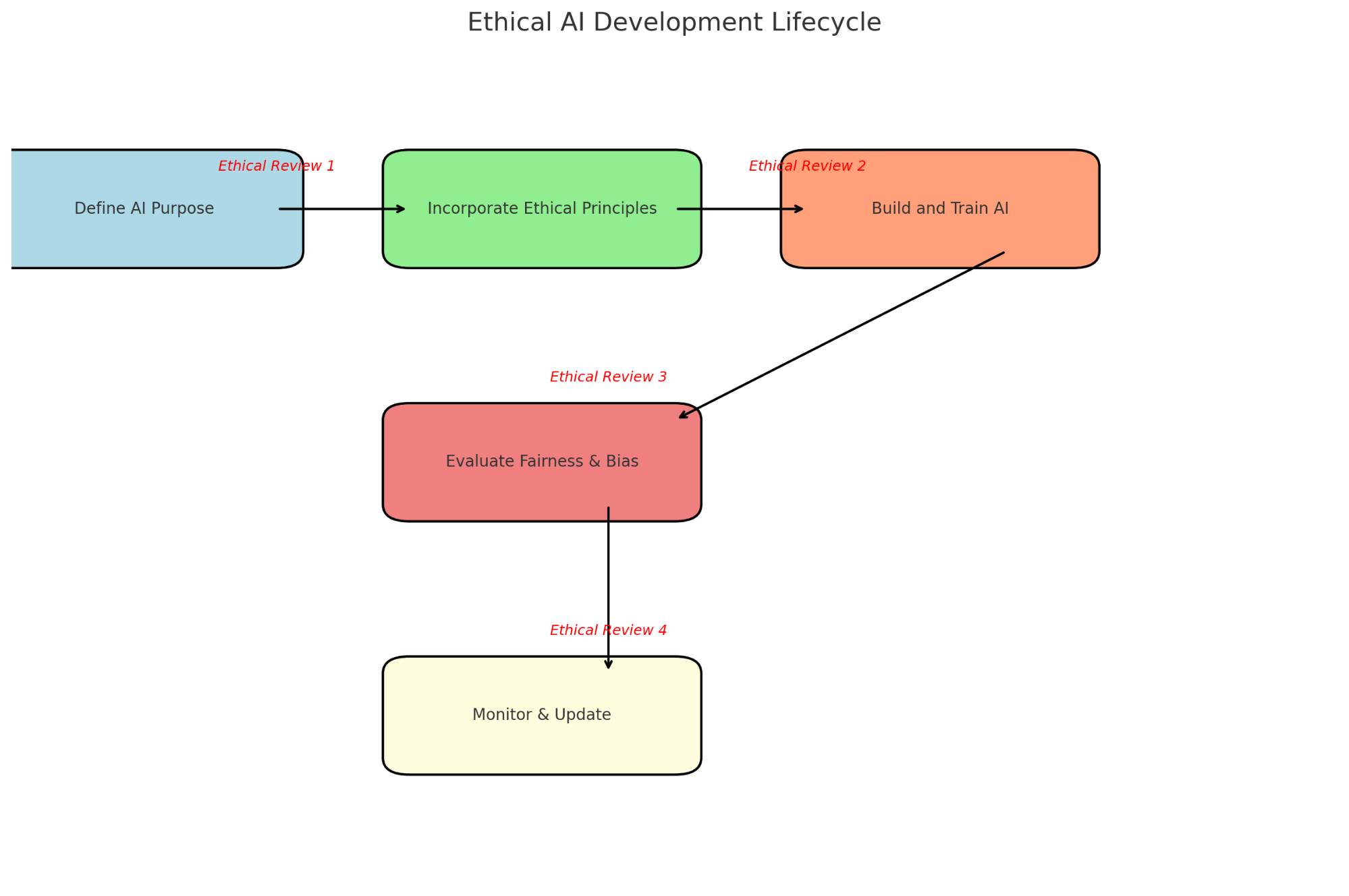

Ethical AI development lifecycle, from concept to deployment, highlighting areas for ethical review and intervention.

The flowchart marks the points in each phase where an ethical review can check for fairness, transparency, and accountability before the system moves to the next stage.

Conclusion: Navigating the Road Ahead for AI Ethics

As AI technologies continue to evolve in 2025 and beyond, navigating the ethical challenges of bias, privacy, and accountability will be crucial. By addressing these concerns proactively, businesses, governments, and developers can ensure that AI serves humanity in a fair, transparent, and responsible manner. With ongoing collaboration, regulatory frameworks, and a commitment to ethical practices, AI has the potential to revolutionize industries while respecting the rights and dignity of individuals.

FAQs

Why is AI ethics especially important in 2025?

As AI continues to play a critical role in industries like healthcare, finance, education, and law enforcement, the potential for unintended consequences, such as bias, discrimination, and misuse, has grown. In 2025, the ethical implications of AI are under greater scrutiny to ensure fairness, transparency, and accountability in AI systems, particularly as they influence more significant decisions impacting society.

What are the key ethical challenges in AI?

Key challenges include ensuring data privacy, mitigating algorithmic bias, preventing misuse of AI technologies, and maintaining transparency in AI decision-making. Additionally, issues like accountability for AI-driven actions and potential job displacement due to automation highlight the need for robust ethical frameworks in AI development and deployment.

How are governments and organizations addressing AI ethics?

In 2025, governments and organizations are increasingly adopting AI ethics guidelines, implementing regulatory frameworks, and forming ethics committees to oversee AI use. Initiatives like requiring transparency reports, mandating ethical audits, and collaborating with interdisciplinary experts help ensure responsible AI development. Many companies are also integrating ethical considerations into their AI strategies from the design phase.

What role do individuals and communities play in promoting ethical AI?

Individuals and communities play a vital role by advocating for ethical AI practices, raising awareness of potential biases, and demanding accountability from AI developers and users. Public participation in policy discussions, collaboration between technologists and ethicists, and continuous education about AI's societal impacts help create a collective effort to promote fairness, inclusivity, and responsible AI use.